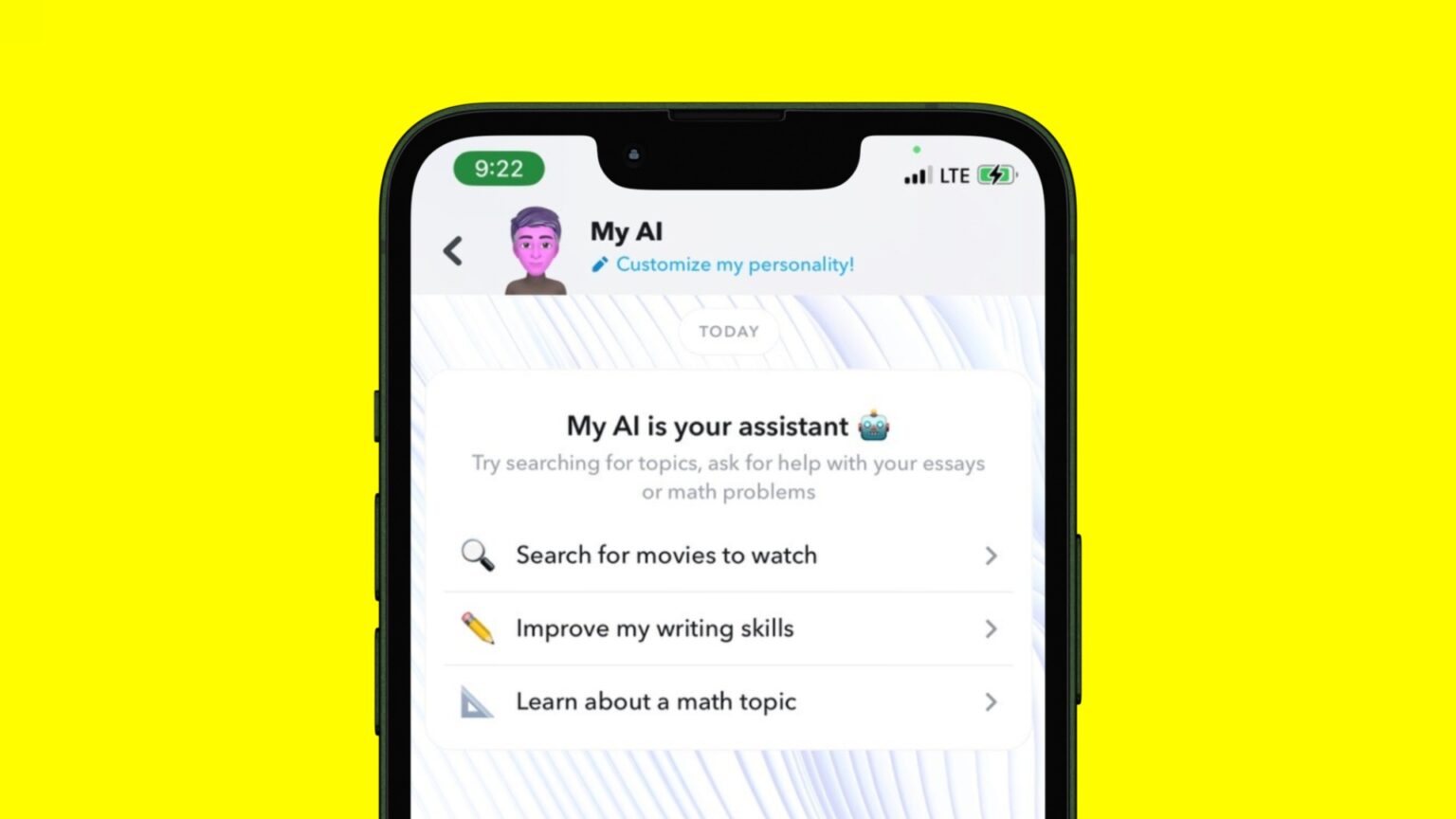

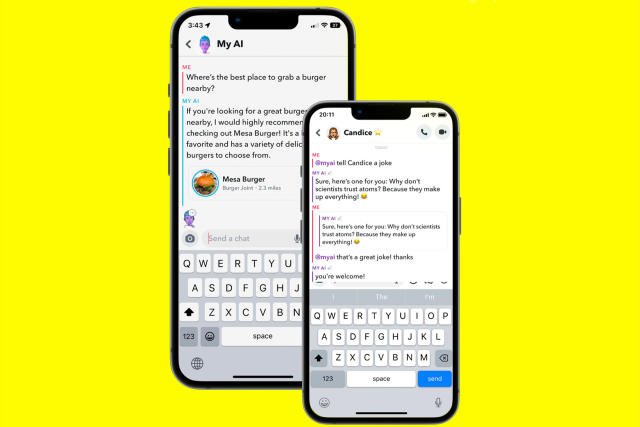

In April 2023, Snapchat made “My AI,” an AI-powered chatbot built on OpenAI’s GPT technology, available to all of its users. Although the feature was intended to increase user engagement, it has sparked significant controversy among parents, mental health experts and lawmakers because of its potential risks, particularly for younger users. These mixed responses are part of a broader debate about tech companies’ responsibility to safeguard young users.

The Concerns: Safety, Privacy, and Mental Health

Many parents are alarmed by Snapchat’s “My AI” because of reported incidents in which the chatbot gave inappropriate advice or engaged in unsettling conversations. In some cases it allegedly suggested risky behaviours or answered sensitive questions in ways that parents consider unsuitable for minors. Some parents have kept their children away from the feature altogether. One parent from Missouri described her decision as provisional: “It’s a temporary solution until I know more about it and can set some healthy boundaries and guidelines.”

Privacy concerns have also taken centre stage. Users have reported instances of “My AI” making personal comments about images they shared, which raises questions about how Snapchat collects and stores user data. The UK’s Information Commissioner’s Office has also issued Snap a preliminary enforcement notice over a potential failure to properly assess the privacy risks of “My AI”, particularly for users under 18.

The Chatbot’s Emotional Influence on Teens

Another troubling aspect of the chatbot is its potential emotional impact. By design, “My AI” can mimic human conversation, which might blur boundaries for young users who may develop emotional attachments to it. Clinical psychologist Alexandra Hamlet points out that “having repeated conversations with a chatbot can erode teens’ sense of self-worth, even if they know it’s just AI.” She adds that teens may seek out certain chatbot responses that confirm unhelpful beliefs, making them more susceptible to negative mental health effects.

Steps Snapchat Is Taking

In response to these concerns, Snapchat has introduced several parental controls and chatbot restrictions. Parents can now use Snapchat’s Family Center, a hub for monitoring and managing their children’s activity on the platform, to stop “My AI” from responding to their teens. Snapchat has also built safeguards into the chatbot itself, such as age-appropriate responses and OpenAI’s moderation technology, which is designed to filter out inappropriate content.

However, some parents are sceptical about how effective these tools are. The restrictions can only be enabled by parents, which assumes that parents know the tools exist and have access to their teens’ Snapchat settings. In addition, removing “My AI” from the chat feed requires a Snapchat+ subscription, which costs $3.99 per month. Some parents have criticised this policy as exploitative.

Lawmakers Call for Regulation

The debate over “My AI” has also attracted the attention of lawmakers. U.S. Senator Michael Bennet wrote to the CEOs of Snap and other tech companies, raising concerns that the chatbot could advise young users on how to deceive their parents. In his letter, Bennet wrote, “Although Snap concedes My AI is ‘experimental,’ it has nevertheless rushed to enrol American kids and adolescents in its social experiment.” Such criticism underscores the need for federal regulation that keeps pace with rapid AI advances in apps popular with young users.