In a move that has drawn wide attention in the AI community, Anthropic has unveiled its latest model, Claude 3.7 Sonnet, touting it as a groundbreaking AI model that can “think” about a question for as long as the user wants. While the company’s claims are certainly ambitious, skeptics are questioning whether this new offering truly represents the next big disruption in artificial intelligence or if it’s simply another incremental step in an increasingly crowded field.

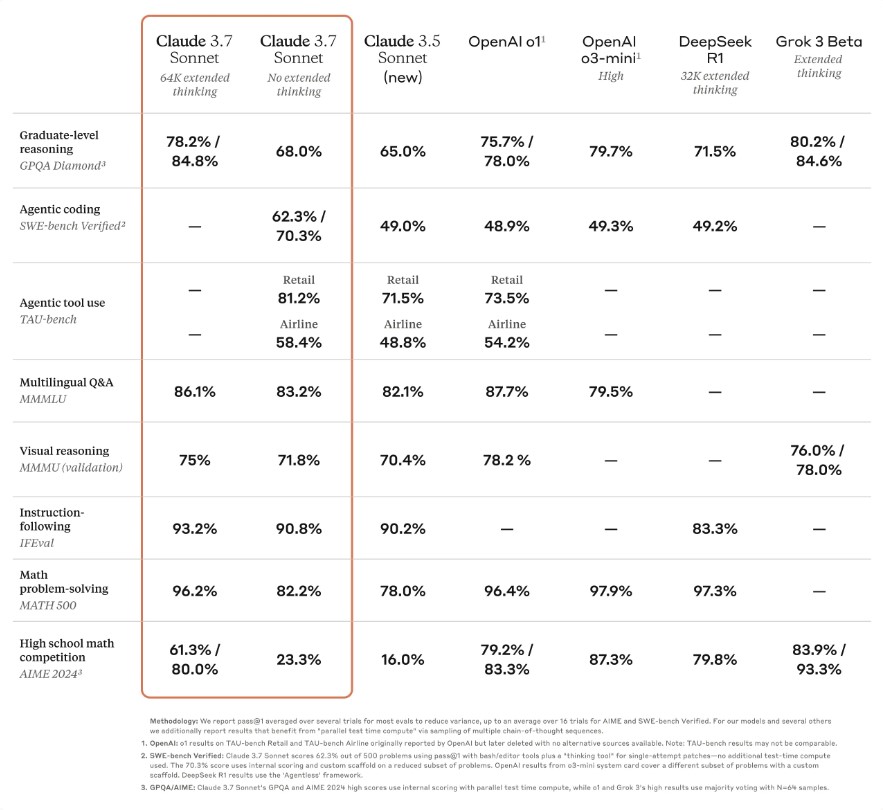

Claude 3.7 Sonnet is being marketed as the industry’s first “hybrid AI reasoning model,” combining quick responses with extended cognitive abilities in a single package. Anthropic asserts that users can toggle between standard and “extended thinking” modes, allowing the AI to adapt its response style based on the complexity of the task at hand. This flexibility, they argue, sets Claude 3.7 Sonnet apart from its predecessors and competitors.

However, as we’ve seen time and time again in the AI industry, revolutionary claims often fall short of real-world performance. While Anthropic’s approach is intriguing, it remains to be seen whether this hybrid model can truly deliver on its promises without compromising efficiency or accuracy.

The “Extended Thinking” Conundrum

At the heart of Claude 3.7 Sonnet’s purported innovation is its “extended thinking mode.” This feature allegedly allows the AI to engage in more in-depth reasoning when faced with complex tasks, breaking down complicated questions into smaller queries and systematically compiling responses. Anthropic claims this process is made visible to users, providing insight into the AI’s reasoning steps.
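To make the “visible reasoning” idea concrete, here is a minimal sketch of how a developer might separate the reasoning trace from the final answer. It assumes the response arrives as a list of content blocks, where reasoning blocks carry `type: "thinking"` and answer blocks carry `type: "text"`. That shape is an assumption based on Anthropic’s public API documentation, and the sample data below is hand-written for illustration, not real model output.

```python
from typing import Any, Dict, List, Tuple


def split_response(content: List[Dict[str, Any]]) -> Tuple[str, str]:
    """Return (reasoning, answer) from a list of response content blocks.

    Assumed block shapes (not confirmed by this article):
      {"type": "thinking", "thinking": "..."}  -> visible reasoning
      {"type": "text", "text": "..."}          -> final answer
    Blocks of any other type are ignored.
    """
    reasoning = [b.get("thinking", "") for b in content if b.get("type") == "thinking"]
    answer = [b.get("text", "") for b in content if b.get("type") == "text"]
    return "\n".join(reasoning), "\n".join(answer)


# Hypothetical response content, written by hand for illustration only.
sample = [
    {"type": "thinking", "thinking": "17 * 6 = 102, then add 4."},
    {"type": "text", "text": "The result is 106."},
]

reasoning, answer = split_response(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

Keeping the two apart lets an application log or display the reasoning separately, which is useful for auditing but, as noted, says nothing about whether the answer itself is correct.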

While this transparency is commendable, it’s worth questioning whether making the AI’s thought process visible truly enhances its capabilities or if it’s merely a flashy feature designed to instill confidence in users. After all, the ability to see how an AI arrives at its conclusions doesn’t necessarily guarantee the accuracy or relevance of those conclusions.

The “Thinking Budget” – A Real Innovation or Marketing Gimmick?

Anthropic has introduced a “thinking budget” feature, which lets developers using the API cap how many tokens the model may spend reasoning before it answers. This concept of trading speed and cost for answer quality is intriguing, but it also raises questions about the model’s efficiency. If an AI needs to be explicitly told how long to think about a problem, does that not indicate limitations in its ability to autonomously gauge the complexity of tasks?
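As a rough illustration of what setting a budget looks like in practice, the sketch below builds a request payload with and without extended thinking. The field names (`thinking`, `budget_tokens`), the model identifier, the 1,024-token minimum, and the rule that the budget must be smaller than `max_tokens` are assumptions drawn from Anthropic’s public API documentation rather than from this article, and `build_request` is a hypothetical helper. No network call is made.

```python
from typing import Any, Dict, Optional

# Assumed minimum budget; check the current API documentation before relying on it.
MIN_BUDGET_TOKENS = 1024


def build_request(prompt: str, max_tokens: int,
                  budget_tokens: Optional[int] = None) -> Dict[str, Any]:
    """Build a Messages-style request body (hypothetical helper).

    budget_tokens=None -> standard mode, no extended thinking.
    budget_tokens=N    -> extended thinking with at most N reasoning tokens.
    """
    request: Dict[str, Any] = {
        "model": "claude-3-7-sonnet-20250219",  # assumed model identifier
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if budget_tokens is not None:
        if budget_tokens < MIN_BUDGET_TOKENS:
            raise ValueError(f"budget_tokens must be at least {MIN_BUDGET_TOKENS}")
        if budget_tokens >= max_tokens:
            raise ValueError("budget_tokens must be smaller than max_tokens")
        request["thinking"] = {"type": "enabled", "budget_tokens": budget_tokens}
    return request


quick = build_request("What is the capital of France?", max_tokens=1024)
deep = build_request("Prove that sqrt(2) is irrational.", max_tokens=20000,
                     budget_tokens=16000)
print("thinking" in quick)
print(deep["thinking"])
```

The same model serves both requests; only the presence and size of the budget changes, which is the “hybrid” behavior the company is describing.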

Coding Capabilities: A Step Forward or Par for the Course?

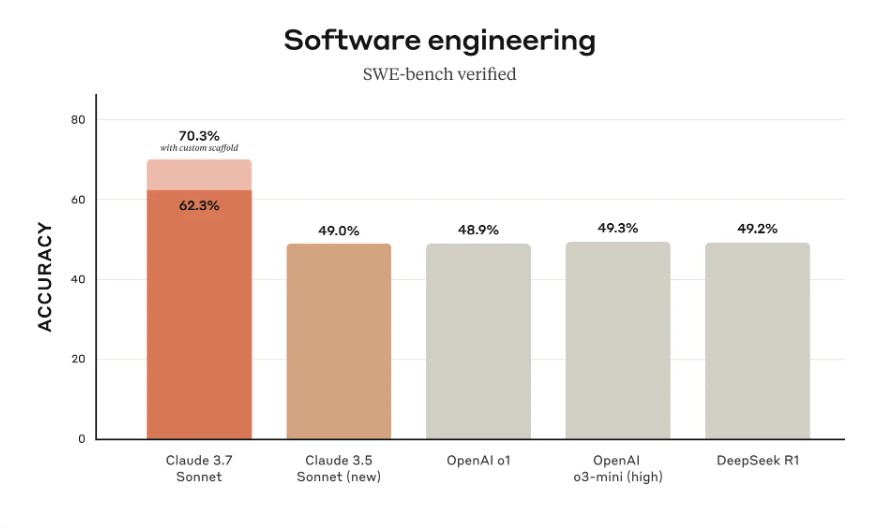

Claude 3.7 Sonnet boasts improved coding capabilities, including front-end tasks and a new command line tool called Claude Code. Anthropic reports that in its early testing Claude Code completed tasks in a single pass that would typically take 45+ minutes of manual work, but these are the company’s own figures and it’s crucial to approach them with caution. The AI industry has a history of overpromising and underdelivering when it comes to coding assistance.

Market Position and Pricing

Anthropic is positioning Claude 3.7 Sonnet as a premium offering, with pricing that reflects its supposedly advanced capabilities:

| Input Tokens | Output Tokens |
|---|---|
| $3 per million | $15 per million |

This pricing structure places Claude 3.7 Sonnet at a higher cost point than some competitors, such as OpenAI’s o3-mini and DeepSeek’s R1. Anthropic justifies this premium by emphasizing the model’s hybrid nature, but potential users will need to carefully weigh whether the added cost translates to tangible benefits in real-world applications.
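To weigh that cost concretely, the rates above can be turned into a per-request estimate. The sketch below is a back-of-the-envelope calculation, not a billing tool: `estimate_cost_usd` is a hypothetical helper, the token counts are invented for illustration, and it assumes that reasoning tokens are billed at the output rate.

```python
# Rates quoted in this article, in US dollars per million tokens.
INPUT_USD_PER_MILLION = 3.0
OUTPUT_USD_PER_MILLION = 15.0


def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      thinking_tokens: int = 0) -> float:
    """Estimate the cost of one request in US dollars.

    Assumption: thinking (reasoning) tokens are billed as output tokens.
    """
    billed_output = output_tokens + thinking_tokens
    total = (input_tokens * INPUT_USD_PER_MILLION
             + billed_output * OUTPUT_USD_PER_MILLION)
    return total / 1_000_000


# Invented example: a 2,000-token prompt with a 500-token answer.
standard = estimate_cost_usd(2_000, 500)
extended = estimate_cost_usd(2_000, 500, thinking_tokens=8_000)
print(f"standard mode: ${standard:.4f}")  # standard mode: $0.0135
print(f"extended mode: ${extended:.4f}")  # extended mode: $0.1335
```

Under these assumptions the same question costs roughly ten times more with an 8,000-token reasoning trace, which is the trade-off potential users would need to justify with better answers.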

The Broader Context

As we evaluate Claude 3.7 Sonnet’s potential impact, it’s essential to consider the broader context of AI development. Anthropic’s latest offering enters a market where companies like OpenAI, Google, and others are constantly pushing the boundaries of what AI can do. The introduction of a hybrid reasoning model reflects a growing trend in AI development to create more versatile and human-like AI assistants.

However, we’ve seen similar trends before, and the results have often been mixed. While AI has made significant strides in recent years, many of the most hyped advancements have failed to live up to their initial promise. It’s crucial to approach Claude 3.7 Sonnet with a healthy dose of skepticism, recognizing that true disruption in the AI field is often more evolutionary than revolutionary.

Looking Ahead

As Claude 3.7 Sonnet rolls out to users and developers, its true capabilities and limitations will become clearer. While Anthropic’s vision of an AI that can seamlessly switch between quick responses and deep reasoning is compelling, the real test will be in its practical applications across various industries and use cases.

For now, it’s prudent to view Claude 3.7 Sonnet as an interesting development in the ongoing evolution of AI technology, rather than an immediate game-changer. As with any new AI model, time and extensive real-world testing will be the ultimate judges of its value and impact on the industry.

In the fast-paced world of AI development, today’s groundbreaking innovation can quickly become tomorrow’s outdated technology. While Claude 3.7 Sonnet shows promise, it’s essential to remember that the next true AI disruptor may still be waiting in the wings, ready to surpass even the most advanced models of today.